Enterprises Must Consider SLMs for GenAI

Small language models (SLMs) specialize in GenAI operations, have a smaller blast radius, and use less compute than their larger siblings, LLMs.

Wait, what? I think there’s a typo in that headline; it should be LLM, or large language model. After all, that’s what everyone is talking about.

LLMs are the driving force behind today’s GenAI hype. Trained on billions or trillions of pieces of data, these models can do everything from giving you advice on your current mood to helping you discover new types of dad jokes. And while that’s really cool for the average person interfacing with OpenAI’s ChatGPT, Anthropic’s Claude, or any of the thousands of LLMs available right now, as a business you may want to reconsider a LARGE language model in favor of a small one: an SLM (small language model).

LLM vs. SLM

So let’s go back; wayyyy back. Back to the suit-fitted, dress attire of yesteryear. Back when huge banks of computers filled rooms and floors as a single entity that was accessed through retro-futuristic terminals. My favorite concept of this? The original Tron:

The ‘Master Control Program’, MCP for short, was the AI villain that controlled and assimilated other programs from across the world for Encom. It naturally spoke with humans and was eventually felled by Tron, a security program that ended the MCP’s reign.

Ahhh, Sci-fi.

Modern LLMs aren’t that different from the MCP: they’re all-knowing centers of massive amounts of machine-trained data, pre-canned and tuned to convey information as naturally as you or I would in a casual conversation.

Also like the MCP, an LLM can be a security and privacy risk when tied to business data and applications. When connected to these components, an internal or external threat actor can coax an LLM to forgo its guardrails or query sensitive data.

When Flynn, the rightfully disgruntled ex-employee, forged access to the MCP through current employees, he was whisked away to a digital world inside the mainframe. Though not as profound, unauthorized access to critical company IP (intellectual property) can be made just as easy by an LLM using GenAI (generative AI).

So remember like, 200 words ago about that term SLM? Imagine if Tron’s premise was an AI that only had access to, say, laser schematics and data to improve the digitizing of matter. Nothing extra. Ok, maybe some dad jokes, because as a laser technician I’d love some mid-day funnies:

“Why did the digital chicken cross the road?”

“…”

“It was about to become a chicken bite”

Ok, that was bad, but I think you get it. Our ‘Laser Chicken SLM’ specializes in a very focused dataset and task: understanding Encom’s laser schematics and telling laser dad jokes. This smaller attack surface doesn’t prevent someone like the MCP from compromising its information, but what IS compromised is much less than with a large model. Also, an attacker would have to compromise other, adjacent SLMs to get deeper context or secrets. Separating AI models provides better security hygiene and aligns model management to knowledge buckets or tasks inside a business, rather than relying on one large model for everything.
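To make the blast radius idea a bit more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the model names and the knowledge buckets are made up for illustration, and a real deployment would enforce these boundaries with actual access controls rather than a dictionary.

```python
# Hypothetical knowledge buckets, each served by its own small model.
MODEL_SCOPES = {
    "laser-slm": {"laser-schematics", "dad-jokes"},
    "finance-slm": {"invoices", "budgets"},
    "hr-slm": {"payroll", "reviews"},
}


def exposed_data(compromised_models: set[str]) -> set[str]:
    """Return every knowledge bucket reachable through the compromised models."""
    exposed: set[str] = set()
    for name in compromised_models:
        exposed |= MODEL_SCOPES[name]
    return exposed


# One compromised SLM exposes only its own bucket...
print(sorted(exposed_data({"laser-slm"})))  # ['dad-jokes', 'laser-schematics']

# ...while a single model holding everything exposes it all at once.
print(len(exposed_data(set(MODEL_SCOPES))))  # 6
```

The point of the toy example is only the ratio: compromising one small model yields two buckets here, and the attacker has to break into each adjacent model to collect the rest.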

The GenAI Edge with SLMs: Less Power, Less Hardware, Less Headaches

AI models are the result of huge amounts of data and processing power (compute), trained over millions of GPU hours. Using a trained model also takes a large amount of compute on the user side. When you run a chatbot, for example, every interaction with the AI model has a cost: a compute operation to query, or infer, using a GPU card or a newer CPU with AI capabilities. Models also read and write text in small chunks (whole words or pieces of words) called tokens, and they can only handle a certain number of them at a time. The combination of compute and tokenization costs may show up to an end user as “AI slow down”, where a question may go unanswered, time out, or not function. Nothing is free.
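If tokens feel abstract, a rough sketch helps. The Python below is not a real tokenizer: `rough_tokens` is a made-up stand-in that splits on words and punctuation, while real models use learned sub-word vocabularies and will count differently. The window sizes are also invented for the example.

```python
import re


def rough_tokens(text: str) -> list[str]:
    """Split text into words and punctuation marks, a crude stand-in for a tokenizer."""
    return re.findall(r"\w+|[^\w\s]", text)


def fits_context(prompt: str, context_window: int, reserved_for_reply: int) -> bool:
    """Return True if the prompt still leaves room for the reply inside the window."""
    return len(rough_tokens(prompt)) + reserved_for_reply <= context_window


prompt = "Why did the digital chicken cross the road?"
print(len(rough_tokens(prompt)))                                     # 9
print(fits_context(prompt, context_window=16, reserved_for_reply=7))  # True
print(fits_context(prompt, context_window=16, reserved_for_reply=8))  # False
```

Every token in the prompt and in the reply counts against the same budget, which is why long documents and long answers compete with each other.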

These days many users of AI are not close to AI compute operations. Most are remote at the network edges on a myriad of devices performing an array of tasks. Sure, a company could spend large amounts of capital on compute resources for AI, but why? With SLMs and private cloud platforms designed for the edge, you can save money, time, and resources.

Imagine you’re a hybrid legal worker whose expertise and main role is to identify and correct incorrect language in business contracts. You paid for OpenAI ChatGPT and turned off prompt sharing, but a new mandate stipulates you must use internal AI resources to protect company IP.

Ugh. What are you going to do?

Enter the SLM. Small, specialized, powerful. It is 1/10 the size of a typical LLM, or even smaller. It can run locally on your MacBook, on a slimmed-down edge server with optimized GPU hardware, or even GPU-less on AI CPUs that include powerful natural language processing extensions.
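Some back-of-the-envelope math shows why size matters so much for running locally. The sketch below estimates the memory needed just to hold a model's weights: parameter count times bytes per parameter. The 70-billion and 7-billion parameter counts are illustrative assumptions, not figures for any specific model, and the estimate ignores everything else a running model needs (activations, caches, the runtime itself).

```python
def weight_memory_gb(parameters: float, bytes_per_parameter: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    return parameters * bytes_per_parameter / 1e9


# Hypothetical model sizes, chosen to reflect the "1/10 the size" comparison.
large = weight_memory_gb(70e9, 2)        # 70B parameters, 16-bit weights
small = weight_memory_gb(7e9, 2)         # 7B parameters, 16-bit weights
small_4bit = weight_memory_gb(7e9, 0.5)  # same small model, 4-bit quantized weights

print(large, small, small_4bit)  # 140.0 14.0 3.5
```

Under these assumptions the large model needs a multi-GPU server just to load, while the small one fits on a single card, and a quantized version of it fits comfortably in laptop memory.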

You can use an SLM to help a legal professional find and understand duplicate contexts and problematic language, or trigger a workflow to bring in other people simply based on a keyword.
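The keyword-triggered workflow can be sketched in a few lines of Python. This is a simplified illustration: the keywords, team names, and the `find_escalations` helper are all hypothetical, and plain substring matching stands in for the SLM that would actually flag the language.

```python
from dataclasses import dataclass

# Hypothetical mapping of contract keywords to the team that should be looped in.
ESCALATION_RULES = {
    "indemnify": "risk-team",
    "non-compete": "hr-legal",
    "exclusive": "commercial-legal",
}


@dataclass
class Escalation:
    keyword: str
    team: str


def find_escalations(contract_text: str) -> list[Escalation]:
    """Return one escalation per rule whose keyword appears in the contract."""
    lowered = contract_text.lower()
    return [
        Escalation(keyword, team)
        for keyword, team in ESCALATION_RULES.items()
        if keyword in lowered
    ]


clause = "The Supplier shall indemnify the Buyer under an exclusive license."
for item in find_escalations(clause):
    print(f"{item.keyword} -> notify {item.team}")
```

In practice the matching step is where the SLM earns its keep, since it can catch problematic language that never uses the exact keyword.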

Your IT dept may even need to allow you to be part of the testing for these SLMs. But a series of tickets and back-and-forth communication can be clunky. Imagine a world where a few clicks can find, swap, and implement a new SLM. A good starting point to find SLMs is a list like this on Hugging Face.

Still, the challenge remains how quickly IT can qualify in, or qualify out, a particular model. This is where a private cloud platform with deep AI roots and self-service comes to save the day.

A Private Cloud Data Platform: Nutanix GPT-in-a-Box 2.0

Ok, ok, this is a plug but hear me out.

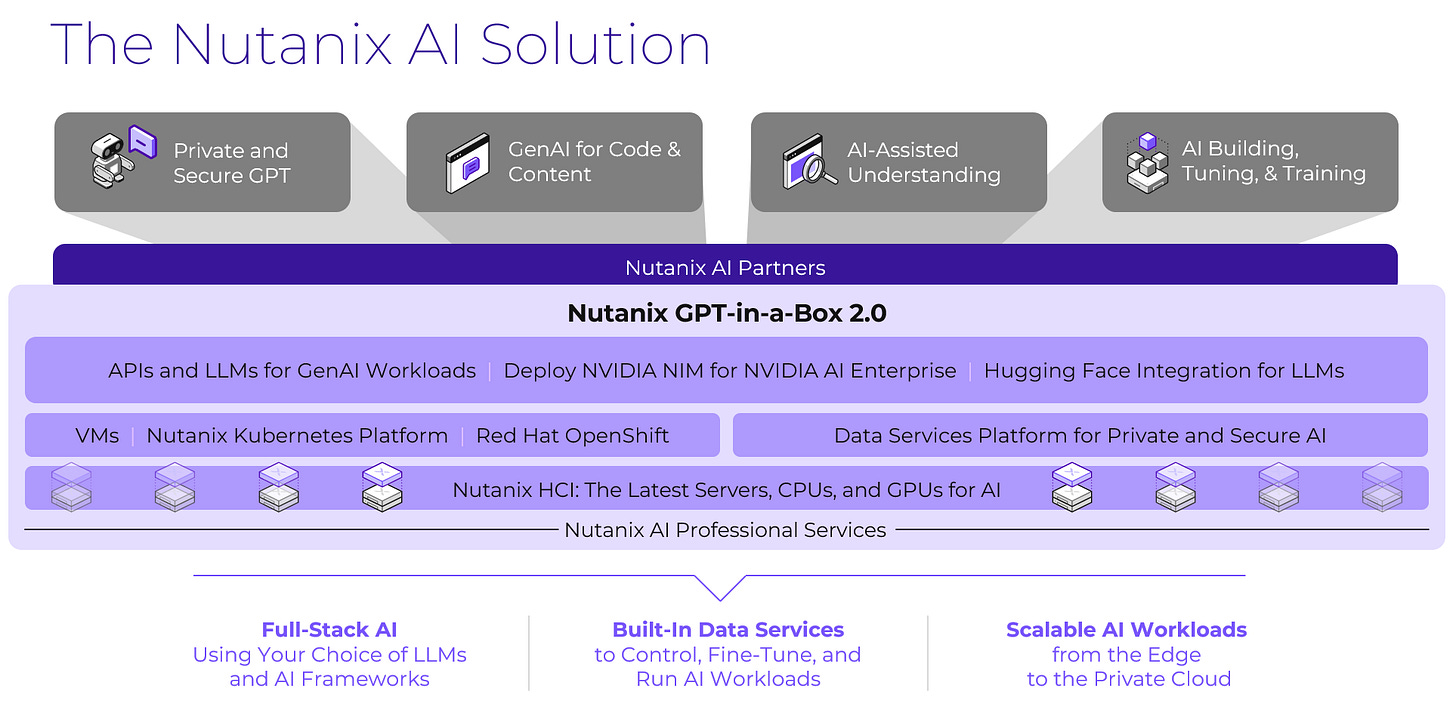

AI for your business is much more than GPUs and the latest LLM. It’s about data, lifecycle management, and enterprise scale. It’s about privacy and security operations that are identical whether you’re on-premises or in the public cloud. It’s about making your IT wizards into AI wizards. Most importantly, it’s about choice: the choice to deploy something today without fear it will be obsolete tomorrow, and the ability to adapt, to evolve.

Nutanix was born on the notion that a resilient and scalable data platform was critical to a business. It’s since evolved to spread this message beyond the datacenter into public clouds. The explosion of AI only reinforces this idea: You must control your data; no small print, no cloud contracts, no promises, just good old-fashioned ownership.

Data control now becomes the deciding factor for GenAI. In reality, most organizations will not create their own LLMs or SLMs, but will use an open-source model or pay for a specialized model from a trusted vendor. This means deploying an AI app should be easy, secure, and interchangeable. It should be simple.

Nutanix GPT-in-a-Box 2.0 is a private cloud solution that includes your choice of hardware, software, and data services for GenAI. Think of it like a box of everything you need to run AI applications, including GPUs, CPUs, memory, storage, networking, and a single-pane-of-glass dashboard to control it all. Now imagine you can combine these boxes into clusters of servers across data centers or clouds. What you enact or change in a single location also changes everywhere else.

Cool, right?

But what about GenAI itself? You know, the model management, development, and testing? An LLM/SLM isn’t enough. There’s probably no budget to find ‘AI specialists’. Heck, even if you did, these days it would just be someone like me who has used AI enough to be dangerous :-) There must be a way to retrain technologists into AI experts, and with GPT-in-a-Box 2.0 there is.

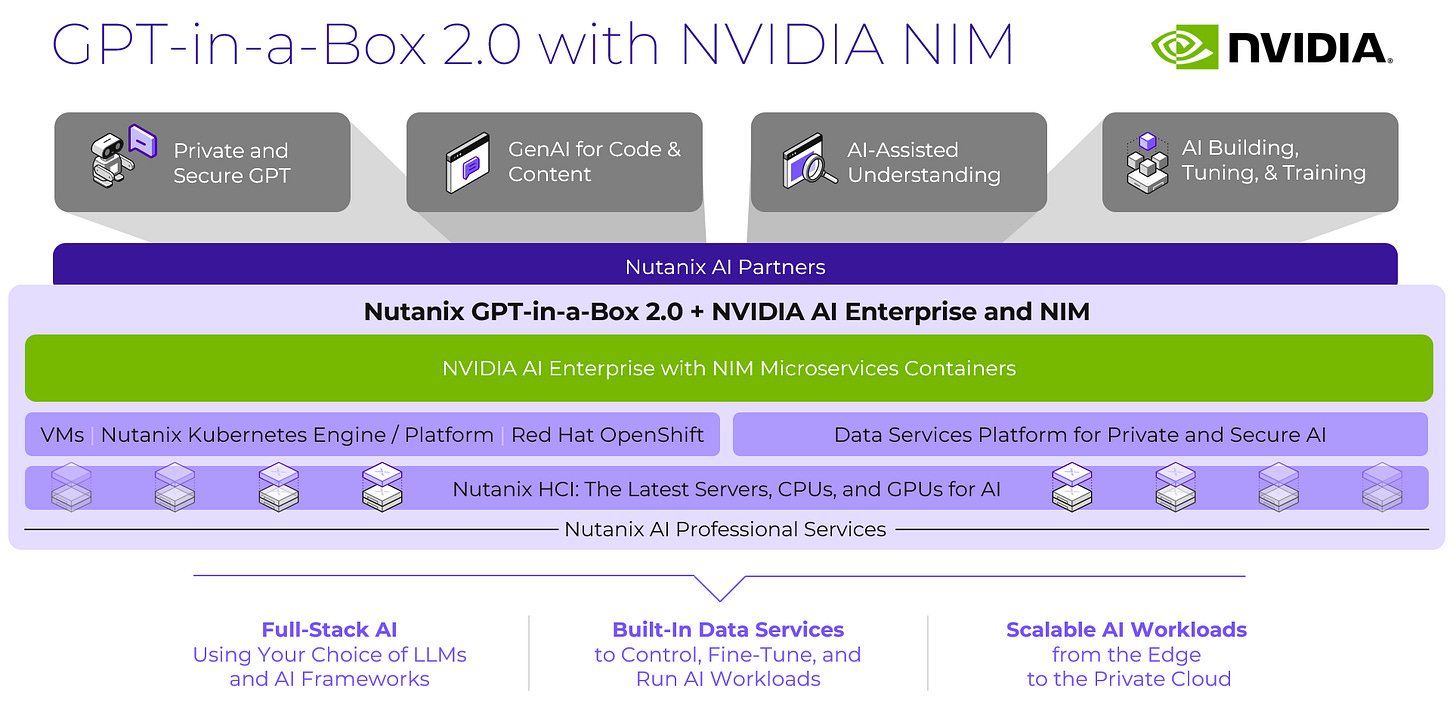

Nutanix AI Endpoint and NVIDIA NIM

Nutanix AI Endpoint will be a native container-based workflow ‘engine’ that allows you to search and download validated LLMs and SLMs from Hugging Face, or upload your own model. It has a built-in API creator that lets you tie an enterprise-grade API to your model within a couple of clicks. Not the right model? No worries, simply remove the connection, download a new model, and tie the same API to the new model. Pretty slick.
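The "same API, different model" idea is a familiar software pattern, and a tiny Python sketch shows its shape. To be clear, this is not the Nutanix AI Endpoint API or anything like its implementation: the `Endpoint` class, its methods, and the two stand-in models are hypothetical names invented here to illustrate the pattern.

```python
from typing import Callable, Optional

# A "model" here is just any function that turns a prompt into a reply.
Model = Callable[[str], str]


class Endpoint:
    """A stable endpoint name that forwards prompts to whichever model is attached."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._model: Optional[Model] = None

    def attach(self, model: Model) -> None:
        self._model = model

    def detach(self) -> None:
        self._model = None

    def query(self, prompt: str) -> str:
        if self._model is None:
            raise RuntimeError(f"no model attached to endpoint {self.name!r}")
        return self._model(prompt)


# Two stand-in models; real ones would run inference instead of formatting a string.
def model_a(prompt: str) -> str:
    return f"model-a: {prompt}"


def model_b(prompt: str) -> str:
    return f"model-b: {prompt}"


endpoint = Endpoint("contracts")
endpoint.attach(model_a)
print(endpoint.query("review clause 4"))  # model-a: review clause 4

# Not the right model? Remove the connection and tie the same endpoint to a new one.
endpoint.detach()
endpoint.attach(model_b)
print(endpoint.query("review clause 4"))  # model-b: review clause 4
```

The application calling `endpoint.query` never changes, which is what makes qualifying a model in or out cheap.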

For NVIDIA AI Enterprise users, the Nutanix AI Endpoint’s engine, which is under development, will be ‘swappable’ for NVIDIA NIM, a set of containers that create a microservices infrastructure based on NVIDIA’s latest offering. Like the native engine in the Nutanix AI Endpoint, the option to run NVIDIA NIM provides a choice depending on your business requirements or outcomes.

And this is just the beginning.

You will be able to swap future engines into Nutanix GPT-in-a-Box 2.0, giving you exactly what you need to make GenAI yours.

Back to our legal user example from before:

Now imagine if your IT dept had GPT-in-a-Box 2.0 with AI Endpoint. Testing SLMs would be easy, seamless, and straightforward. Not the right model? Open source license constraints? Just grab a new model, repoint, and test. It’s painting a future of GenAI model management for large, small, or whatever comes next.

Are SLMs the Future of Enterprise AI?

Surprise, the answer is yes, AND no.

Enterprises must consider the diversity of problems GenAI could solve and balance that with what should be tackled. SLMs are a great solution for exploring AI as a way to improve the little things.

Again, with our legal example, providing AI as a tool, not a replacement, will enhance user productivity. An SLM is an impactful way to validate AI without a heavy capital investment in hardware, software, or talent. Partnering with a trusted vendor that ensures your success unlocks this goal. Nutanix provides solutions from the edge to the public cloud, allowing you to buy exactly what you need today and expand tomorrow when needed. Imagine getting an SLM up and running in minutes with all the data and workflows in your control. That’s Nutanix.

AI Is Changing, So You Should Too

What you’re doing or even conceiving of today with GenAI WILL change, and will do so soon. We’re at the very beginning of its impact on business. The risk is choosing a solution or a direction that may not be supported, or even exist, tomorrow. Yet waiting for things to shake out isn’t an option either. The advantages AI brings are too impactful. So what do you do?

Find a solution that enables choice, one that can adapt. Focus on:

A strong but upgradable hardware platform

A software-defined approach for data and applications ensuring they can operate anywhere

A platform where AI isn’t just a focus, it’s the reason

Flexibility is key when the outcome of a journey is unknown, and GenAI has just charted a path for us all.

But, a word of caution: AI shouldn’t change everything.

The goal of any GenAI initiative should be to augment the human aspect, not replace it. AI, like any new and advanced technology, will undoubtedly replace some of the jobs humans do today, much like centralized heating replaced chimney sweeps, or automated phone line switching replaced switchboard operators. Overall, the majority of jobs can be aided by leveraging AI to automate mundane tasks, leaving humans to do what they do best: imagine, create, and thrive.

End of line.

Learn more about Nutanix, GPT-in-a-Box, and NVIDIA at nutanix.com/ai

Disclaimer: These thoughts are my own and not reflective of anything related to Nutanix.